Dear all,

The recently approved EU-US Data Privacy Framework is set to face the same legal battle as its predecessors, starting in September. In other news, OpenAI filed a trademark application for GPT-5 (we raised our eyebrows too), and Zoom is under fire for data processing practices related to training AI models and its use of user content. Google’s antitrust case in Italy over data portability has been settled, but the US Justice Department’s case against Google will go to trial next month (we’ll cover this one in upcoming digests).

Let’s get started.

Stephanie and the Digital Watch team

PS. We’re taking a short break next week; expect us back in a fortnight.

// HIGHLIGHT //

Schrems III: EU-US privacy framework to be challenged in court in September

Austrian privacy activist Max Schrems, chairman of NOYB (European Center for Digital Rights; the name stands for None Of Your Business), is expected to file a legal challenge in September against the recently approved EU-US Trans-Atlantic Data Privacy Framework (TADPF).

The new framework, which governs transfers of European citizens’ personal data across the Atlantic, was finalised by the European Commission and the US government last month. Known as the TADPF on Twit…sorry, X, the framework is actually the third of its kind, succeeding the Safe Harbour (invalidated in October 2015) and the Privacy Shield (invalidated in July 2020). Notably, it was Max Schrems who played a significant role in invalidating both frameworks; the two cases became known as Schrems I and Schrems II.

NOYB announced a few weeks ago that it would challenge the new framework, which it describes as essentially a copy of the failed Privacy Shield.

Issue #1: Surveillance on non-US individuals

The fundamental problem with the new framework, as with its predecessors, lies largely in a US law: Section 702 of the Foreign Intelligence Surveillance Act (FISA), which allows surveillance of non-US persons. While the Fourth Amendment to the US Constitution protects the privacy of American citizens, European citizens have no constitutional rights in the USA and therefore cannot defend themselves against FISA 702 surveillance in the same way.

At the same time, EU law allows personal data to leave the EU only if adequate protection is ensured. So, for the EU to green-light data transfers under the new framework, the USA and EU agreed to limit bulk surveillance to ‘what is necessary and proportionate’ and to share a common understanding of what ‘proportionate’ means, without actually curtailing the powers that US authorities wield.

Issue #2: The redress mechanism

Under the previous framework, the redress mechanism for citizens, which relied on an ombudsperson, did not align with European law. The new agreement introduces changes by establishing a Civil Liberties Protection Officer and a body referred to as a court (which NOYB considers to be simply a semi-independent executive body).

Although the new bodies offer some minor improvements over the ombudsperson, individuals will probably have no direct interaction with them, so the outcomes of seeking redress are likely to be similar to those the former ombudsperson could have reached.

On the path to Schrems III

Before the framework can be challenged, companies need to start relying on it, so that a person whose data is transferred under the new instrument can bring a case. Schrems indicated that the lawsuit will be filed in Austria, his home country.

The hope is that the Austrian court will then quickly decide whether to accept the challenge and refer it to the Court of Justice of the European Union (CJEU).

Is there any chance that this trajectory might be avoided? Yes, but it’s unlikely. FISA 702 has a sunset clause, which means that it must be reauthorised by the US Congress by the end of 2023. The new litigation will add to existing pressure for reforming FISA 702, but Schrems himself thinks the US government may be unwilling to reauthorise or reform FISA 702 now that the framework has been agreed.

As the Schrems III litigation unfolds, it is increasingly likely that the case will end up before the CJEU, and Schrems is confident about the outcome there: ‘Just announcing that something is “new”, “robust” or “effective” does not cut it before the Court of Justice.’

// AI GOVERNANCE //

OpenAI files trademark application for GPT-5

OpenAI has filed a trademark application for GPT-5 at the US Patent and Trademark Office, covering areas such as AI-generated text, neural network software, and related services. The filing was spotted by a trademark attorney, who tweeted about it; there has been no official confirmation from OpenAI about GPT-5.

A trademark application doesn’t necessarily mean a working product is in the making. Companies often file trademark applications to stay ahead of competitors or to protect their intellectual property.

Why is it relevant? OpenAI CEO Sam Altman recently denied that the company was working on GPT-5. Speaking at an event at MIT, Altman responded to an open letter calling for a pause in the development of AI systems more powerful than GPT-4. He said the letter lacked technical nuance and wrongly stated that OpenAI was currently training GPT-5, calling it ‘sort of silly’. (Jump to minute 16:00 of the recording to listen.) Time will tell.

Zoom under fire for training AI models with user data without opt-out option

Zoom’s latest update to its Terms of Service allows it to use user data for machine learning and AI, without giving users the option to opt out.

In addition, Section 10.4 of the updated terms also grants Zoom a ‘perpetual, worldwide, non-exclusive, royalty-free, sublicensable, and transferable license’ to use customer content in any way it likes.

Why is it relevant? First, it gives Zoom a sweeping range of powers over people’s content (arguing that users should read the terms and conditions will neither earn Zoom any kudos nor alleviate users’ concerns). Second, the Zoom case echoes one of the earliest legal challenges OpenAI faced, when the Italian data protection authority banned ChatGPT in Italy and later allowed it to operate again after OpenAI ‘granted all individuals in Europe, including non-users, the right to opt-out from processing of their data for training of algorithms also by way of an online, easily accessible ad-hoc form’. But there is one key difference: OpenAI relies on legitimate interest as its legal basis for using data to train its models, which means it must offer an opt-out form. Zoom users can enable generative AI features, but there is as yet no clear way to opt out.

UPDATE (8 August 2023): Zoom updated its terms of service on the evening of 7 August (right after this issue was published) to say that ‘Notwithstanding the above, Zoom will not use audio, video or chat Customer Content to train our artificial intelligence models without your consent’. However, this is unlikely to alleviate concerns. First, the term ‘customer content’ does not cover all the content that Zoom will use to train its AI models. Second, it is still unclear whether Zoom intends to obtain users’ consent (in Europe) in accordance with GDPR requirements. Third, there is still no way to opt out, or at least no straightforward one. Fourth, the sweeping powers Zoom has given itself over user content (Section 10.4) remain unchanged.

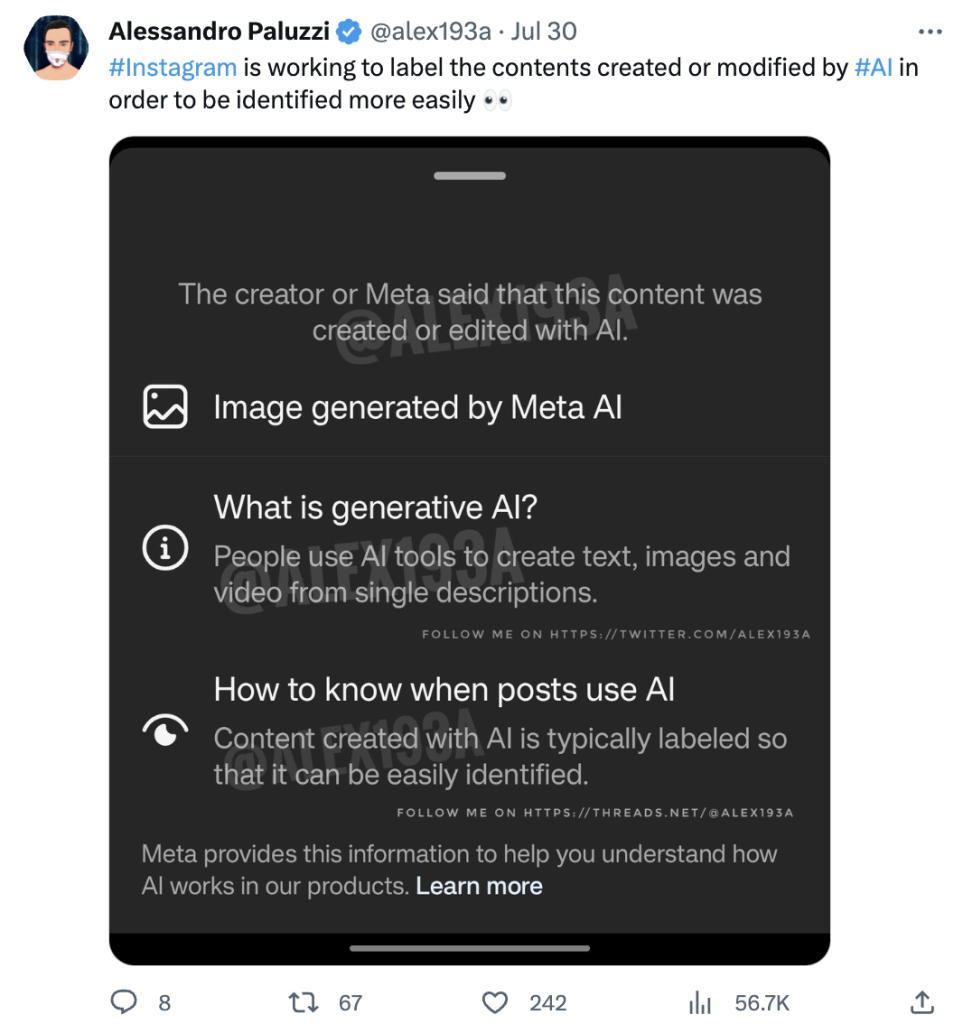

Are labels for AI-generated content around the corner?

Alessandro Paluzzi, a mobile developer and self-proclaimed leaker, has disclosed that Instagram is developing a label specifically for AI-generated content. As companies vie for dominance in generative AI, content labels thrust them into a race to combat misinformation. Whichever company’s tool labels AI-generated content successfully and accurately could earn the trust of users and governments.

UK classifies AI as a chronic risk

For the first time, AI has been officially classified as a security threat to the UK, according to the recently published National Risk Register 2023. It falls into the category of chronic risks, which differ from acute risks in that they pose ongoing challenges that gradually undermine the UK’s economy, communities, way of life, and national security. Chronic risks typically unfold over an extended period, although not always.

Advances in AI systems and their capabilities carry both chronic and acute risks. For instance, they could facilitate the spread of harmful misinformation and disinformation. If mishandled, these risks could have significant consequences.

Why is this relevant? The UK recently announced it will host the first global summit on AI Safety, bringing together key countries, leading tech companies, and researchers to (hopefully) agree on safety measures to evaluate and monitor risks from AI. The UK also recently chaired the UN Security Council’s first-ever debate on AI.

Was this newsletter forwarded to you, and you’d like to see more?

// CRYPTO-BIOMETRICS //

Worldcoin wants to attract governments; Kenya suspends project

Tools for Humanity, the San Francisco- and Berlin-based company behind Worldcoin, the new crypto-biometric project we wrote about last week, hopes to attract governments as users.

Ricardo Macieira, general manager for Europe at Tools for Humanity, said the company’s idea is to build the infrastructure for others to use.

Why is this relevant? The project is already mired in controversy over Worldcoin’s data collection practices, not least its method of encouraging sign-ups by offering crypto in exchange for iris scans. Kenya is the latest country to investigate the project and has suspended Worldcoin’s local activities in the meantime.

// KIDS //

China proposes screen time limits for kids

The Cyberspace Administration of China (CAC) has released draft guidelines for the introduction of screen time software, aimed at curbing smartphone addiction among minors and what the government says is the impact of screen time on children’s academic performance, social skills, and overall well-being. The draft rules would mandate curfews and age-based time limits, as well as age-appropriate content.

The draft rules also provide for anti-bypass functions, such as restoring factory settings if the device is not used according to the rules.

Why is it relevant? The guidelines, which are an add-on to previous regulations that restrict the amount of time under-18s spend online, give parents much of the management responsibility. This makes the widespread enforcement of the rules questionable – which we’re pretty sure is what kids in China are hoping for.

// COMPETITION //

Italian consumer watchdog closes Google’s data portability investigation

Italy’s Autorità Garante della Concorrenza e del Mercato (AGCM) has accepted commitments proposed by Google, closing its investigation into Google’s alleged abuse of a dominant position in the market for user data portability. Data portability, a right under the GDPR, allows users to move their data between services, which increases competitive pressure on companies like Google.

Google presented three commitments. The first two offer solutions that supplement Takeout, Google’s tool that helps users back up their data, making it easier to export data to third-party operators. The third allows testing of a new solution that enables direct data portability between services, with users’ authorisation. This aims to improve interoperability within the Google ecosystem.

Why is it relevant? First, of the many antitrust cases the company faces worldwide, this one had the potential to escalate further, but was resolved at this stage. Second, the benefits of this outcome extend beyond Italian users.

We list these events mainly as a reminder, since we covered them last week. It will be a quiet month. Happy August!

10–13 August: DEF CON 31 in Las Vegas will feature, alongside other workshops, trainings, and contests, the White House-backed red-teaming of OpenAI’s models.

21 August–1 September: The Ad Hoc Committee on Cybercrime meets in New York for its 6th session.

25 August: Very large online platforms and search engines must start complying with their obligations under the Digital Services Act (DSA).

WSJ: How Binance transacts billions in Chinese market despite ban

Cryptocurrency exchange Binance appears to be continuing its operations in China despite the country’s ban on cryptocurrencies. Binance reportedly does around $90 billion worth of business in China, one of its largest markets. An investigative article from the Wall Street Journal explores how the exchange manages to operate there and the potential risks involved.